Tax time is here again! For some of us, this means it's time to treat ourselves to something special.

Like a new high end GPU.

Introduction

Every now and then, something comes along that makes us glad that we're PC gamers. A product that reminds us of exactly how cool technology is and why we love to sink our hard earned into achieving the best possible gaming experience that money can buy.

Enter the GeForce GTX 980 Ti. And we say this knowing that there is a higher end product called the Titan X that costs moderately more money for slightly better performance, all other things being equal, which fortunately they're not. It's a little more complex than that, too, but this benefits us, the consumers, with a product that represents the best value high end PC gaming experience available.

Short for Titanium, the Ti suffix has long been the flagship moniker of NVIDIA GPUs for those who want the absolute best performance. If you're impatient and you'd like to get on with the day, we'd just like to say "buy two of them" and enjoy the very best that PC gaming has to offer. We've had a bit of time to play with one (and, for a short amount of time, two of them).

This product sits below the Titan X and above the rest. In fact, it's based on the same GM200 core that powers the Titan X rather than being a souped up GTX980, with a few differences which lead to no more than a 9% performance difference between the Titan X and the GTX980 Ti on paper, and even less difference in reality. This also means that the card carries the same 250W TDP as the Titan X, which is significantly more power draw than the GM204-equipped 980 at 165W. So how could this possibly kill the Titan X and steal the performance crown?

The Gigabyte 980Ti Gaming G1 product

The review card is Gigabyte's top of the line gaming oriented product from their G1 Gaming series.

* Stock cooler with 1000MHz/1076MHz base/boost clocks and a green "GEFORCE GTX" LED on the side of the card.

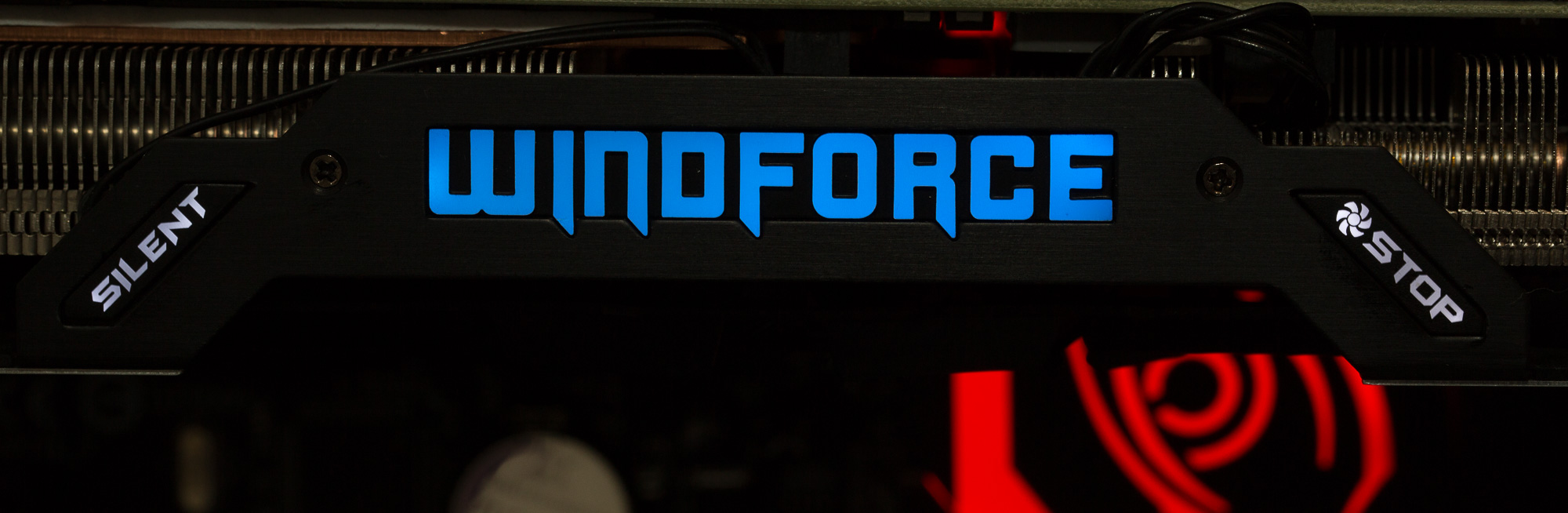

* G1 Gaming with a factory overclock of 1152MHz/1241MHz, a WindForce high performance cooler and multicolour LED WindForce Logo on the side of the card.

In case the boost frequency doesn't quite make sense to you, NVIDIA do a great job of explaining it on their website here:

http://www.geforce.com/hardware/technology...st-2/technology

TL;DR: if your GPU is under-utilised at its base clock rate, it can clock itself higher, safely within its thermal and power limits, to get the best performance. Boost clocks matter; even with GTA V smashing the GPU, we found it sitting at its boost clock for almost the entire time we played at full detail.

Aesthetics matter - the G1 Gaming GTX980 vs the GTX980Ti.

When purchasing the predecessor to this card, I was dismayed that I couldn't get my hands on a 980 with a reference cooler. I wasn't buying that card for performance overclocking; I was buying it for looks and I wanted the text to say "GEFORCE GTX" rather than "WindForce". The stock reference cooler looks very attractive, albeit a bit behind the times in terms of cooling performance. While the reference cooler is sufficient for the GPU, the WindForce cooler is quieter at load and provides 600W of heat dissipation, so it offers plenty of headroom for overclocking.

With the 980 and 980Ti side by side, we see that both have the metal reinforced WindForce fan assembly, but with silver accents on the Ti to set it apart. We now see the "silent / stop" buttons that were introduced with the GTX960: when the card is in silent mode, the WindForce logo shuts off and the buttons either side of the logo light up instead.

In silent mode, the fans do not spin at all, resulting in silent operation when you're not gaming, or running applications or games that don't place a high demand on the GPU. Duck Game is one such game, but let's face it - you're not going to be buying this card for 2D platformers.

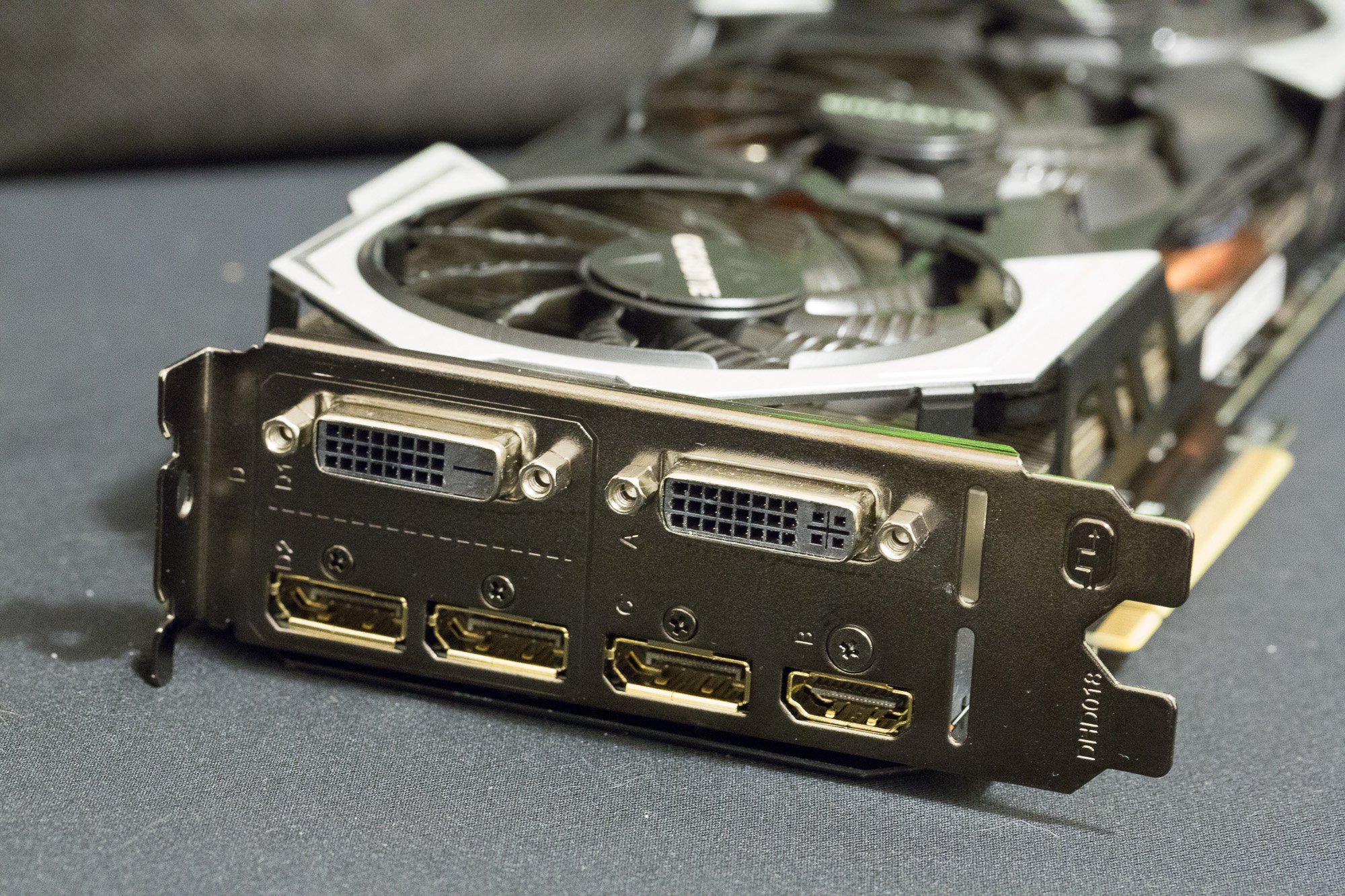

You'll also notice the presence of two 8 pin PCI-E power connectors. The reference design mandates one 6 pin and one 8 pin on the card; two 8 pin inputs allow for more overclocking headroom and more stable operation under demanding conditions, a luxury offered by higher end custom designed cards such as the Gaming G1.

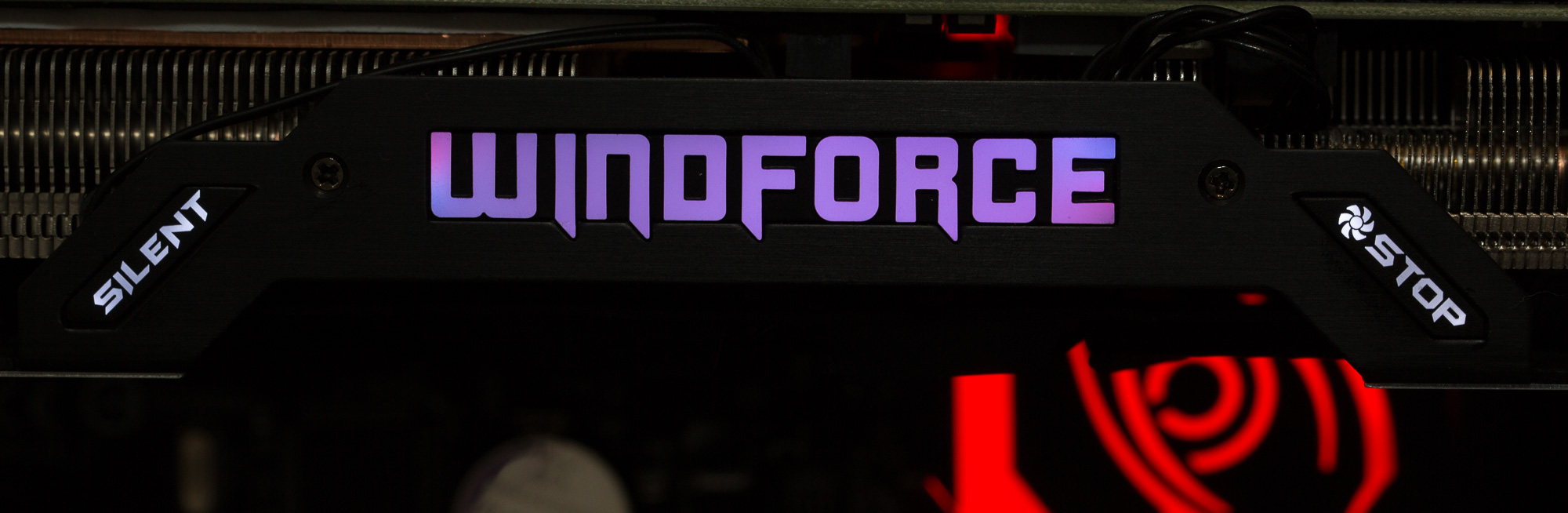

One other big change is how the WindForce LED logo operates. Up until now, the WindForce logo was a solid deep blue colour, which could make colour matching in case builds a challenge; in fact, there were many system builders and modders who would choose a brand of GPU based on how it would look inside their design. Fortunately, Gigabyte now allow us to change this LED to one of seven colour combinations:

Unfortunately, there was one problem: the white setting comes out as a very faint light blue. Builders of white-themed systems take note - this will be obvious when putting a system together, although it shouldn't cause any issues otherwise. The other colours are reasonably accurate to their box and application designation; the logo is controlled from within Gigabyte's OC GURU app, which is also used for overclocking.

Otherwise, case modders rejoice! In our test system, setting the LED to red matched the red colour of the G1 Gaming X99 motherboard it was installed in.

Oh, these look great when you SLI them, too - and you can set each card to its own individual colour.

Drivers and the GeForce Experience.

NVIDIA have built a solid reputation compared to their competitors for releasing reliable and tested driver versions and, on the odd occasion when a bug slips out, for having a fix out quickly. NVIDIA also deliver some great additional features through an applet they call the GeForce Experience, a tool used to maintain driver software, modify settings on the GPU such as the LED, and download optimised gaming profiles that set your in-game settings to NVIDIA's tested configuration for your PC hardware, including GPU type and display resolution.

G-SYNC and why it matters a little more than we thought

G-SYNC is a wonderful technology that makes sense and we'd like to know where it's been all these years.

A graphics card renders whole frames. In our tests, at 2560x1600 at full detail with a pair of GTX980 cards in SLI, we'd see between 30 and 60 frames a second but would hover around 40-80 frames per second.

Displays update at a fixed refresh rate. This has an obvious problem: the graphics card and display are not synchronised. While the display is still receiving and processing one frame, the GPU delivers the next render mid-scan, resulting in an image with a mismatch between the top and bottom and a visible line between them. This is something we call tearing; during periods of fast movement, it appears as if the image is torn in half, and the line moves randomly between frames, resulting in distracting gameplay and delayed reaction times.

The solution was V-SYNC, or Vertical Sync, to synchronise the vertical scan rate so that the GPU would render a frame when the screen is ready, then render the next one. This pegs the GPU at the maximum framerate that the display can support at the best of times, so for a 60Hz display, you won't see your frame rate counter exceed 60FPS.

If the framerate falls below 60FPS? You'll halve the framerate instantly because you'll have to wait for the next screen update cycle to display each frame. Bam, 30FPS and you'll learn to like it.

Of course, we know rendering frames in games is variable depending on the detail in the scene and this changes rapidly, so you'll be limiting your GPU performance significantly.
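The halving effect is easy to see with a little arithmetic. The following is a minimal sketch, assuming simple double-buffered V-SYNC where each frame has to occupy a whole number of refresh intervals; the function name `vsync_fps` is ours for illustration, and real drivers (triple buffering, adaptive V-SYNC) can behave differently.

```python
import math


def vsync_fps(render_fps: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate under simple double-buffered V-SYNC (assumed model)."""
    if render_fps <= 0 or refresh_hz <= 0:
        raise ValueError("frame rate and refresh rate must be positive")
    # A frame that misses a refresh has to wait for the next one, so each
    # frame stays on screen for a whole number of refresh intervals.
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame


if __name__ == "__main__":
    for fps in (90, 60, 59, 40, 25):
        print(f"GPU renders {fps} FPS -> display shows {vsync_fps(fps):.0f} FPS")
```

Under this model, a GPU that can manage 59FPS on a 60Hz display ends up showing 30FPS, and one that manages 25FPS shows 20FPS.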

So, these are digital displays and digital graphics cards, so why not synchronise the two?

Well, the problem has been that up until now, there have been no standards for this. NVIDIA teamed up with several display manufacturers to create G-SYNC, a gaming-optimised mode that dynamically updates your display at a variable refresh rate, anywhere up to 144Hz on 144Hz-capable displays. For a high end GPU such as a 980Ti, this is critical in protecting that investment. This means that 50FPS will be displayed as 50FPS, and if that jumps to 70FPS, then you'll see 70FPS... all without any tearing or flicker.
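As a rough illustration of what a variable refresh rate means in practice, here is a minimal sketch, assuming the display simply follows the GPU up to the panel's maximum refresh rate. The function name `gsync_fps` is hypothetical, and the model ignores the panel's minimum refresh rate and any driver-level details.

```python
def gsync_fps(render_fps: float, max_refresh_hz: float = 144.0) -> float:
    """Displayed frame rate on a variable refresh display (simplified model)."""
    if render_fps <= 0 or max_refresh_hz <= 0:
        raise ValueError("frame rate and refresh rate must be positive")
    # The display refreshes whenever a frame is ready, so the displayed
    # rate tracks the GPU until the panel's maximum refresh rate is hit.
    return min(render_fps, max_refresh_hz)


if __name__ == "__main__":
    for fps in (50, 70, 144, 200):
        shown = gsync_fps(fps)
        print(f"GPU renders {fps} FPS -> display shows {shown:.0f} FPS "
              f"({1000.0 / shown:.1f} ms per frame)")
```

In this model nothing is rounded down to a fraction of the refresh rate; the only cap is the panel itself.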

Even if you're running at 1080p, having the capability to render all the way up to 144FPS gives you a competitive advantage, as you'll see frames before your enemies do, and a GPU such as the 980Ti has plenty of grunt to render at those high framerates.

Suffice to say, I have never been so frustrated with a traditional 60Hz display.

Performance and 4K gaming

NVIDIA are pitching this card at the 4K gaming market; gaming at resolutions of either 3840x2160 or 4096x2160. We had a chance to play with two of these GPUs, in SLI, and we threw a few games at them in the following system:

The LG panels, by the way, are gorgeous.

Grand Theft Auto V has been all the rage for graphics detail lately. I've spent a lot of time with the game on the 980, having to compromise on the very highest end of detail because the 4GB of video memory was insufficient to provide the required performance. The good news is that the GTX980Ti resolves this issue with 6GB of GDDR5 memory. Memory won't be your limit at 2560x1600; at 4K, however, the required memory exceeded the card's 6GB. This is perhaps the first use-case for a Titan X that we've ever seen.

Granted, it's still playable at 4K, even with the sliders slammed to the right side. And the difference between 2560x1600 and 4K, even with the same detail settings, is astounding in terms of how immersive the gameplay is. The level of detail will blow your mind in ways that just need to be seen to be believed. You'll probably be willing to take a small hit to the framerate for this level of detail.

Unfortunately, a single GPU will take a significant hit to framerate so you'll want to dial down the detail a touch to get that smooth 60fps+ gaming happening. Fortunately, SLI comes to the rescue.
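To put the jump in resolution into numbers, here is a short sketch that counts the pixels in each resolution mentioned in this review. It assumes, very roughly, that GPU workload scales with the number of pixels rendered per frame; real games won't scale this neatly, and the helper names are ours.

```python
# Resolutions named in this review, as (width, height) in pixels.
RESOLUTIONS = {
    "2560x1600": (2560, 1600),
    "3840x2160": (3840, 2160),
    "4096x2160": (4096, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels the GPU has to fill for one frame."""
    return width * height


def relative_load(name: str, baseline: str = "2560x1600") -> float:
    """Pixel count relative to the baseline resolution (rough workload proxy)."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


if __name__ == "__main__":
    for name, (width, height) in RESOLUTIONS.items():
        print(f"{name}: {pixel_count(width, height):,} pixels, "
              f"{relative_load(name):.3f}x the 2560x1600 pixel count")
```

3840x2160 works out to a little over twice the pixels of 2560x1600, which goes some way to explaining the framerate hit on a single card.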

Throwing any other game at this, including Tomb Raider in 4K, resulted in absolutely no issues with performance, to the point where we were wishing for 144Hz 4K displays to ship already.

You'll want to do that, by the way. The G1 Gaming cards are well picked - we had this one up to a 1410MHz base clock and experienced no major issues during testing. ASIC quality was 70.9% according to GPU-Z.

Let's talk about how this card represents excellent value. Bear with me here - this is a $1099 card that generally won't have the word "value" anywhere near it. However, we see something very cool going on at the higher end of GPUs which we haven't seen before: near linear price/performance scaling - well, until you hit the $1500+ cost of a Titan X.

So why buy a Titan X?

Easy. If you need the 12GB of video memory. The 980Ti's reduction in CUDA cores, texture units and streaming multiprocessors won't matter to almost everyone. The memory will, if you're playing next generation 4K games with every single slider set to the right hand side. So there is your justification.

Oh, and if you really, really hate money for some reason.

So why buy a 980Ti?

Because, everything else. Any deficit in performance is quickly made up by the factory overclock of the G1, which can be pushed further, so you're getting Titan X performance for less money. Period.

This product embodies everything about being a PC gamer. You'll be left thinking "Gosh, I really am the superior type of gamer" as you smash through your super high detail games at 4K resolution.

This card fits nicely into the NVIDIA product line in both price and performance, which tells us that their marketing team is doing the right thing here. For those who are power (or cash) constrained, the GTX980, at 20-25% less performance and 20-25% less cost, still represents great value. The Titan X looks a bit out of place here, but it's a great choice if you require more than 6GB of video memory and, of course, the bragging rights of owning a Titan X; those black reference coolers are unique and they look incredible in SLI in an all-black PC build. The G1 Gaming card also comes in at twice the price of a GTX970 and approximately twice the performance, making it a worthy alternative to a pair of GTX970 cards in SLI with the benefit of additional video memory.
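The value argument can be sketched with the rough figures quoted in this review. This is a back-of-the-envelope calculation, not a benchmark: performance is normalised to the 980Ti G1, the Titan X is given the full 9% on-paper advantage at a flat $1500, and the GTX970 is taken as exactly half the price and half the performance. All three are simplifying assumptions.

```python
# (price in dollars, performance relative to the 980Ti G1).
# Figures are the rough ones quoted in the review, not measured results.
CARDS = {
    "GTX 980 Ti G1": (1099.0, 1.00),
    "Titan X": (1500.0, 1.09),          # assumes the full 9% on-paper lead
    "GTX 970": (1099.0 / 2, 0.50),      # assumes exactly half price, half speed
}


def perf_per_dollar(price: float, performance: float) -> float:
    """Relative performance bought by each dollar spent."""
    return performance / price


def relative_value(name: str, baseline: str = "GTX 980 Ti G1") -> float:
    """Performance per dollar compared with the baseline card."""
    return perf_per_dollar(*CARDS[name]) / perf_per_dollar(*CARDS[baseline])


if __name__ == "__main__":
    for name in CARDS:
        print(f"{name}: {relative_value(name):.2f}x the 980Ti G1's value per dollar")
```

On these numbers the GTX970 and 980Ti G1 sit on the same price/performance line, while the Titan X delivers roughly four-fifths of the performance per dollar.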

In short, treat yourself. There are some great games out there and around the corner, and the GTX 980 Ti Gaming G1 is the best way to experience them.